Is it the end of programming…?

In the early days, computer scientists wrote machine code containing raw instructions for computers, primarily to perform highly specific tasks. Throughout the 20th century, research in compiler theory led to the creation of high-level programming languages that use more accessible syntax to communicate instructions. Languages like ALGOL, COBOL, C, C++, Java, Python, and Go emerged, making programming less “machine-specific” and more “human-readable.” Every idea in classical computer science — from a database join algorithm to the mind-boggling Paxos consensus protocol — can now be expressed as a human-readable, comprehensible program.

In the early 1990s, AI was seen largely as a collection of algorithms that could make decisions within narrow limits. Supervised methods such as decision trees (and, later, random forests) and unsupervised methods such as k-means clustering leaned on probabilistic and statistical analysis of data. Although the foundations of deep learning, like backpropagation and the precursors of convolutional neural networks, date back to the 1970s and 1980s, the field remained in its infancy until the early 2000s. By 2011, the speed of GPUs had increased significantly, enabling the training of convolutional neural networks without layer-by-layer pre-training. With the increased computing speed, it became apparent that deep learning had significant advantages in terms of efficiency and speed.

The Generative Adversarial Network (GAN) was introduced in 2014 by Ian Goodfellow and his colleagues. In a GAN, two neural networks learn by competing against each other in a game: a generator produces samples, and a discriminator tries to tell them apart from real data. This marked the beginning of understanding the power of training models with vast data and increasing computing power. GANs became a hit, producing unique human faces after being trained on images from across the internet. The fact that GANs could create a human face so convincingly that it could fool another human was both impressive and concerning.

The rise of AI and deep learning is a realization that these algorithms can now be “trained,” much like humans are trained in schools and colleges, and with the computing power at hand, this can happen in mere hours or days. Programming, as we know it, might become obsolete. The conventional idea of “writing a program” may be relegated to very specialized applications, with most software being replaced by AI systems that are “trained” rather than “programmed.” In situations where a “simple” program is needed, those programs will likely be generated by an AI rather than coded by hand.

No doubt, the earliest pioneers of computer science, emerging from the (relatively) primitive cave of electrical engineering, believed that all future computer scientists would need a deep understanding of semiconductors, binary arithmetic, and microprocessor design to understand software. Fast-forward to today, and most software developers have almost no clue how a CPU actually works, let alone the physics underlying transistor design. The idea is simple: why reinvent the wheel when it already exists? Most programming languages nowadays ship with rich standard libraries (C++’s Standard Template Library, or STL, is one example), which means you don’t need to implement linked lists or binary trees yourself, or write Quicksort to sort a list.
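As a small illustration of leaning on a standard library instead of hand-writing data structures, here is a minimal Python sketch. The sample data is made up for the example, and the mapping from each library call to a "classic" structure is a loose analogy rather than an exact equivalence (Python's built-in sort, for instance, is not Quicksort).

```python
import bisect
from collections import deque

# Hypothetical sample data, chosen only for illustration.
scores = [42, 7, 19, 88, 3]

# Sorting: the built-in sorted() stands in for a hand-written sort routine.
ordered = sorted(scores)

# Queue: collections.deque gives fast appends and pops at both ends,
# a job a hand-rolled linked list would otherwise do.
queue = deque(ordered)
queue.appendleft(1)
smallest = queue.popleft()

# Sorted insertion: bisect.insort keeps the list ordered, covering one
# common reason people reach for a binary search tree.
bisect.insort(ordered, 50)

print(ordered)
print(smallest)
```

None of these few lines requires knowing how the underlying structures are implemented, which is exactly the point the paragraph above makes.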

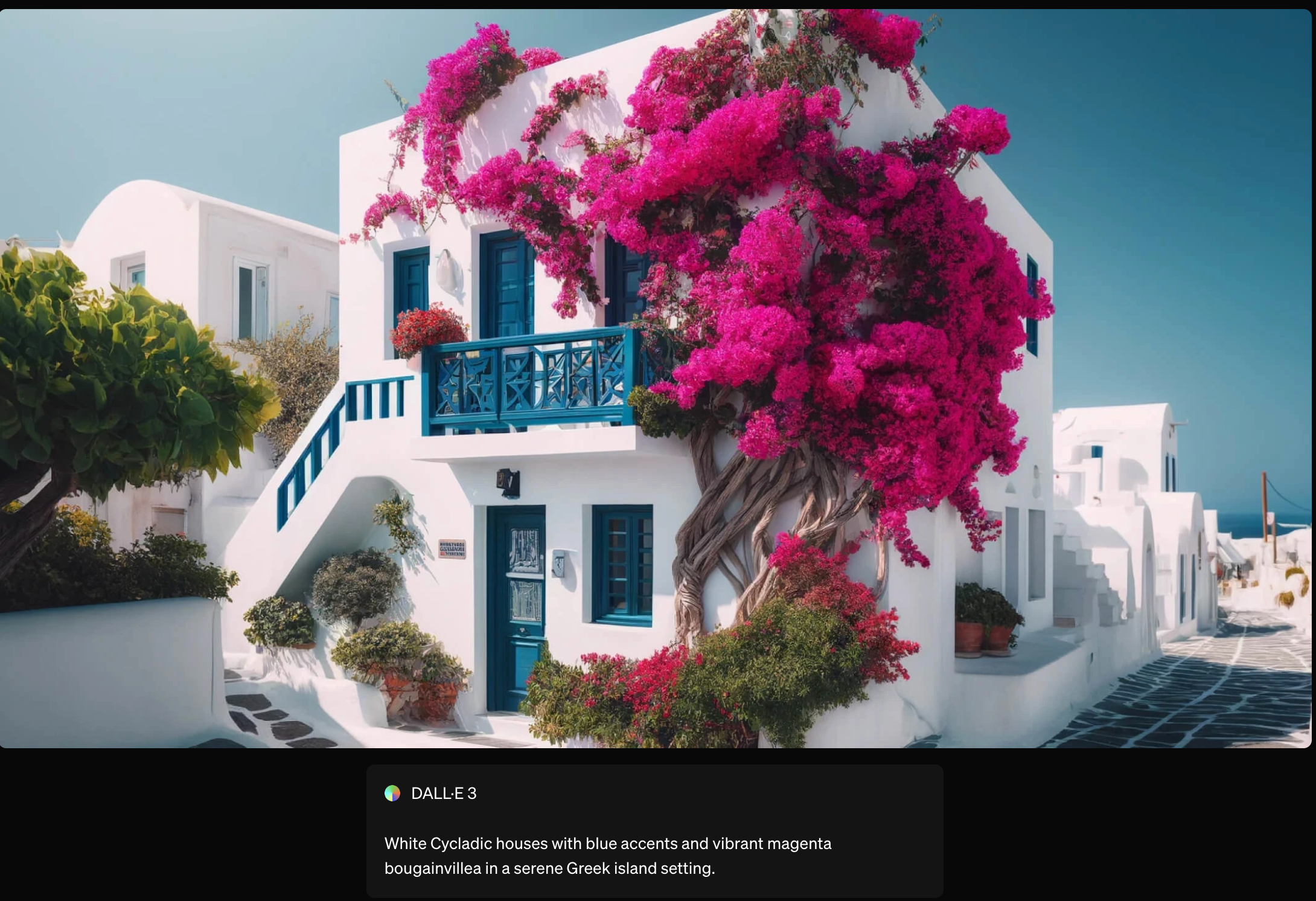

If you’re following AI trends, you’re likely aware of DALL-E, a deep learning model that creates images from text descriptions. Just 15 months after DALL-E’s release, DALL-E 2 was announced, offering more realistic and accurate images at 4x the resolution. DALL-E 3 builds on this with even more refined image generation and improved coherence between text prompts and visual outputs. It’s easy to underestimate the power of increasingly large AI models.

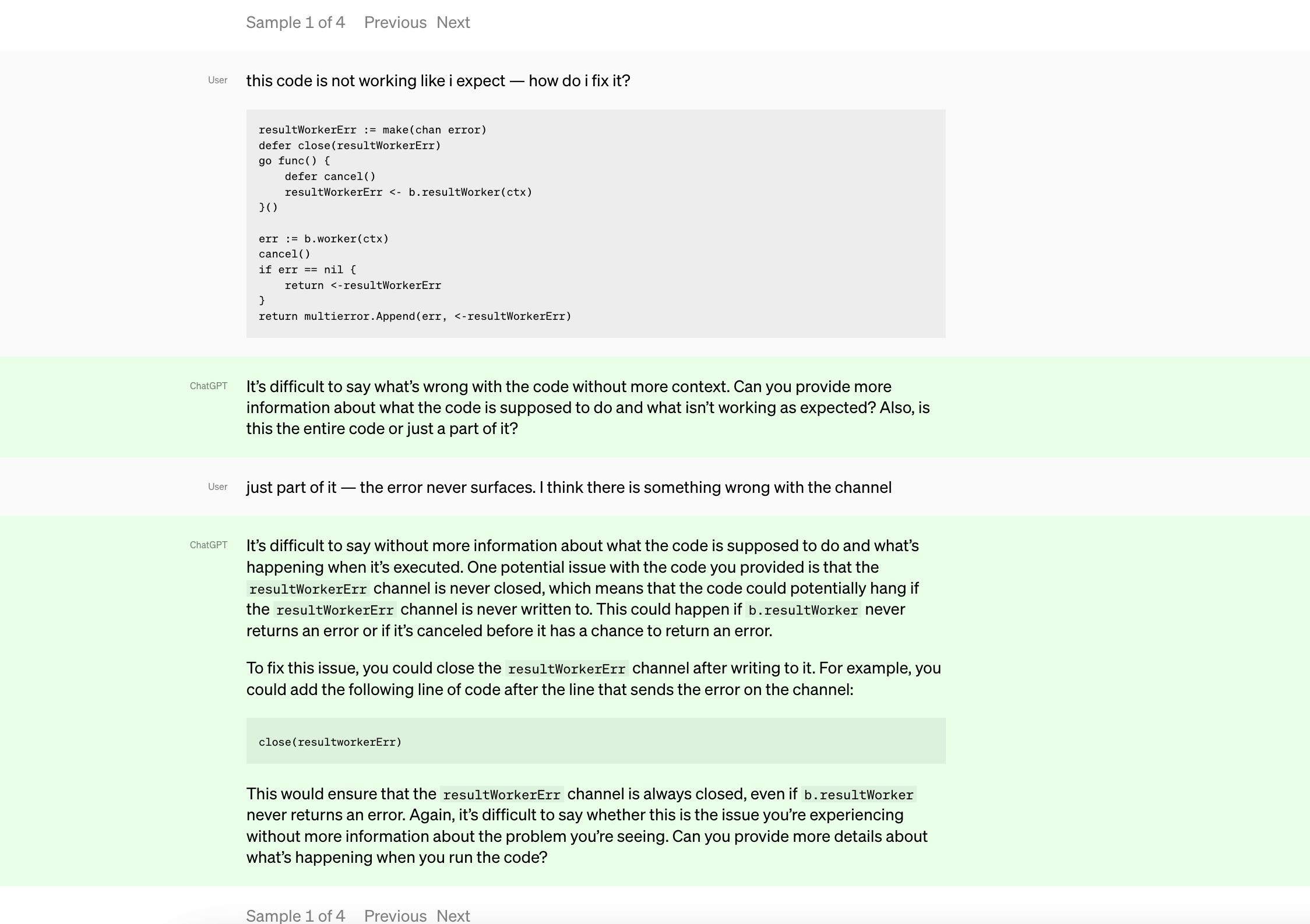

AI coding assistants such as Copilot are only scratching the surface of what I am describing. It seems totally obvious to me that *of course* all programs in the future will ultimately be written by AIs, with humans relegated to, at best, a supervisory role. What blew everyone’s mind was OpenAI’s announcement of ChatGPT, a sibling model to InstructGPT (and god knows how many other flavours of GPT they have in there), which is trained to follow an instruction in a prompt and provide a detailed response. The extent to which ChatGPT can explain and respond is phenomenal. It has reportedly passed a Google coding interview for a Level 3 engineer role with a $183K salary, MBA and law exams at prestigious universities, medical licensing exams, and more. You will not believe it until you try pushing the limits of a conversational bot and watch it surpass your expectations: here.

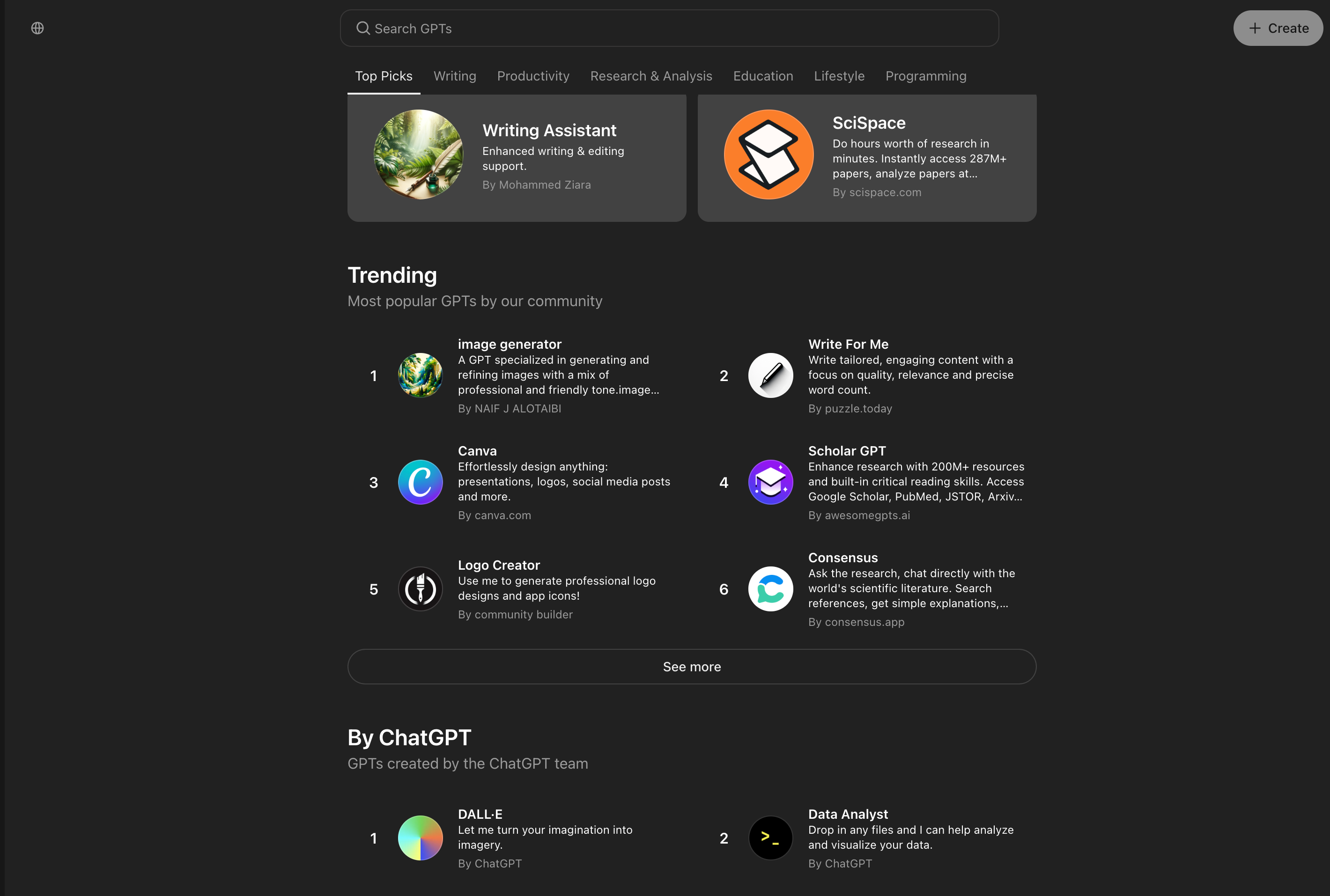

As GPT technology has evolved, so too has its specialization across various domains. These specialized GPTs are not just powerful but tailored for specific tasks, enhancing efficiency and effectiveness in ways that general-purpose models like ChatGPT cannot. It’s like a GPT marketplace where you can pick and choose specialized GPTs for your own work. Here are a few examples:

- CodeGPT: A specialized version of GPT tailored for coding tasks, CodeGPT assists developers by generating code snippets, fixing bugs, and even suggesting optimizations in various programming languages. It is designed to understand the context of code and provide solutions that align with best practices.
- MedGPT: Focused on the medical field, MedGPT is trained on vast amounts of medical literature, research papers, and clinical data. It assists healthcare professionals in diagnosing conditions, recommending treatments, and even predicting patient outcomes based on historical data. MedGPT represents a significant leap in applying AI to healthcare, making expert knowledge more accessible.
- LawGPT: This variant is designed for legal professionals, capable of drafting contracts, analyzing legal documents, and providing insights based on case law. LawGPT reduces the time spent on mundane legal tasks and enhances the accuracy and depth of legal analysis, making it a valuable tool in law firms and corporate legal departments.
- FinGPT: Tailored for the finance industry, FinGPT excels in analyzing market trends, predicting stock movements, and even generating trading strategies. It leverages real-time financial data and historical trends to offer insights that can be crucial for making informed investment decisions.
- ArtGPT: A creative-focused GPT, ArtGPT is designed for artists and designers. It can generate artwork, design concepts, and even assist in creating music or literature. ArtGPT’s ability to mimic various artistic styles and innovate within those frameworks makes it a unique tool for creative professionals.

These specialized GPTs demonstrate the potential of AI to go beyond general-purpose use cases, diving deep into specific industries and domains. They are not just tools but partners that augment human expertise, making complex tasks more manageable and efficient.

GPT-based AI bots are no longer just conversational bots. There’s now AI for every category, a phenomenon being called the “GPT effect”:

- Search: Bing Search combined with ChatGPT answers search queries.
- Design: Genius, an AI design companion, and Galileo, which converts text (ideas) into designs instantly.
- Summarization: ArxivGPT (a Chrome extension) summarizes arXiv papers.
- Image Generation & Avatars: OpenJourney (a Stable Diffusion text-to-image model), DALL-E 2, and the AI avatars trending on social media. DALL-E 3, integrated into ChatGPT Plus, allows users to engage visually within the conversation.

Expanding on this creative potential, OpenAI has introduced “Sora,” a cutting-edge video generation model. Sora creates high-quality, dynamic video content directly from text prompts, similar to how DALL-E works with images. This opens new possibilities for storytelling, advertising, and digital content creation, enabling users to generate entire video sequences that match their textual descriptions. The ability to create video content with such ease and precision marks a significant leap forward in generative AI, pushing the boundaries of what is possible in multimedia production.

In fact, about 90% of major Fortune 500 companies already use some flavour of ChatGPT, either embedded in their applications or alongside them. Google, Meta, Nvidia, and others have their own conversational assistants. In this race towards AI chatbots, OpenAI announced GPT-4 and later gave developers the freedom to create their own custom versions of ChatGPT right from its playground website, which can be shared, published, and reused: a marketplace for GPTs. The categories have grown too vast to list here, but this site captures, maintains, and updates the latest GPT-based applications and use cases.

The engineers of the future will, in a few keystrokes, fire up an instance of a four-quintillion-parameter model that already encodes the full extent of human knowledge (and then some), ready to be given any task required of the machine. The bulk of the intellectual work of getting the machine to do what one wants will be about coming up with the right examples, the right training data, and the right ways to evaluate the training process. Suitably powerful models capable of generalizing via few-shot learning will require only a few good examples of the task to be performed. Massive, human-curated datasets might no longer be necessary in most cases, and most people “training” an AI model will not be running gradient descent loops in PyTorch, or anything like it. They will be teaching by example, and the machine will do the rest.
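To make “teaching by example” a little more concrete, here is a minimal Python sketch of how a few-shot prompt might be assembled. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sentiment examples are all hypothetical choices made for illustration; no particular model or vendor API is assumed, and the resulting string would simply be handed to whatever model you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a plain-text prompt: an instruction, worked examples, then the new input."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final "Output:" is left blank for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical examples: two labelled sentences are the entire "training set".
examples = [
    ("The food was wonderful.", "positive"),
    ("I want a refund.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each input.",
    examples,
    "Best purchase this year.",
)
print(prompt)
```

There is no gradient descent loop anywhere in this sketch: the “training” consists of picking a couple of good examples and choosing how to present them.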

Conclusion

This shift in the underlying definition of computing presents a huge opportunity. It happened because of the vast amounts of data now available and the growing computing power we have to process it. We should accept the future of CS and evolve, rather than knowing what is coming and doing nothing, which reminds me of the recent popular Netflix movie “Don’t Look Up.”

This blog has been written by Divyansh Sharma, working as a Software Development Engineer III at Sixt India.

References :